News

- Jan 2026 Our VideoNSA is accepted by ICLR 2026.

- Nov 2025 Selected (top 10%) to give a talk at the KAUST Rising Stars in AI Symposium 2026.

- Oct 2025 Video-MMLU received the Outstanding Paper Award at the ICCV 2025 Knowledge-Intensive Multimodal Reasoning Workshop, along with a travel grant.

- Oct 2025 We release VideoNSA, a hardware-aware native sparse attention mechanism for video understanding.

- Sep 2025 Invited talk at Lambda AI titled From Seeing to Thinking.

- Sep 2025 One paper accepted by ICCV 2025 KnowledgeMR Workshop.

- Aug 2025 Our paper MovieChat+: Question-aware Sparse Memory for Long Video Question Answering is accepted by IEEE TPAMI.

Selected Publications and Manuscripts

* Equal contribution.

Also see Google Scholar.

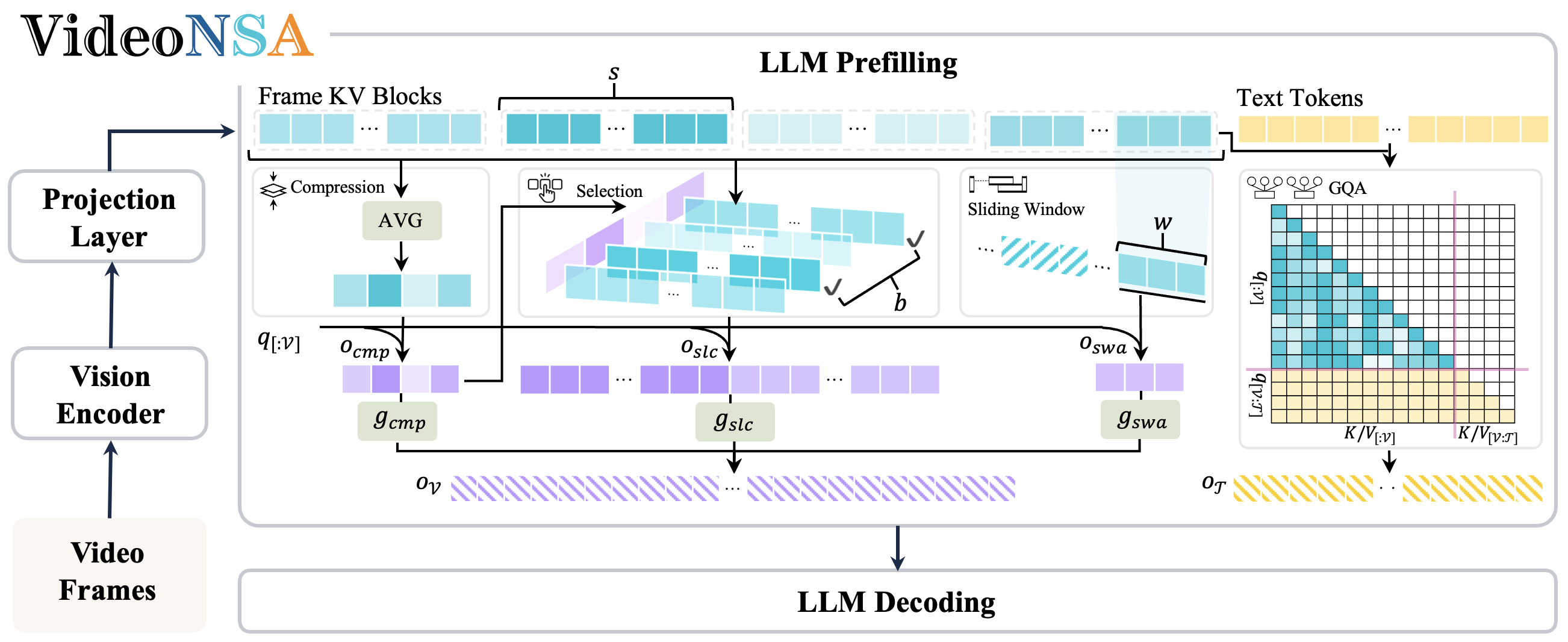

VideoNSA: Native Sparse Attention Scales Video Understanding

ICLR, 2026

VideoNSA delivers hardware-aware native sparse attention primitives for efficient video understanding systems.

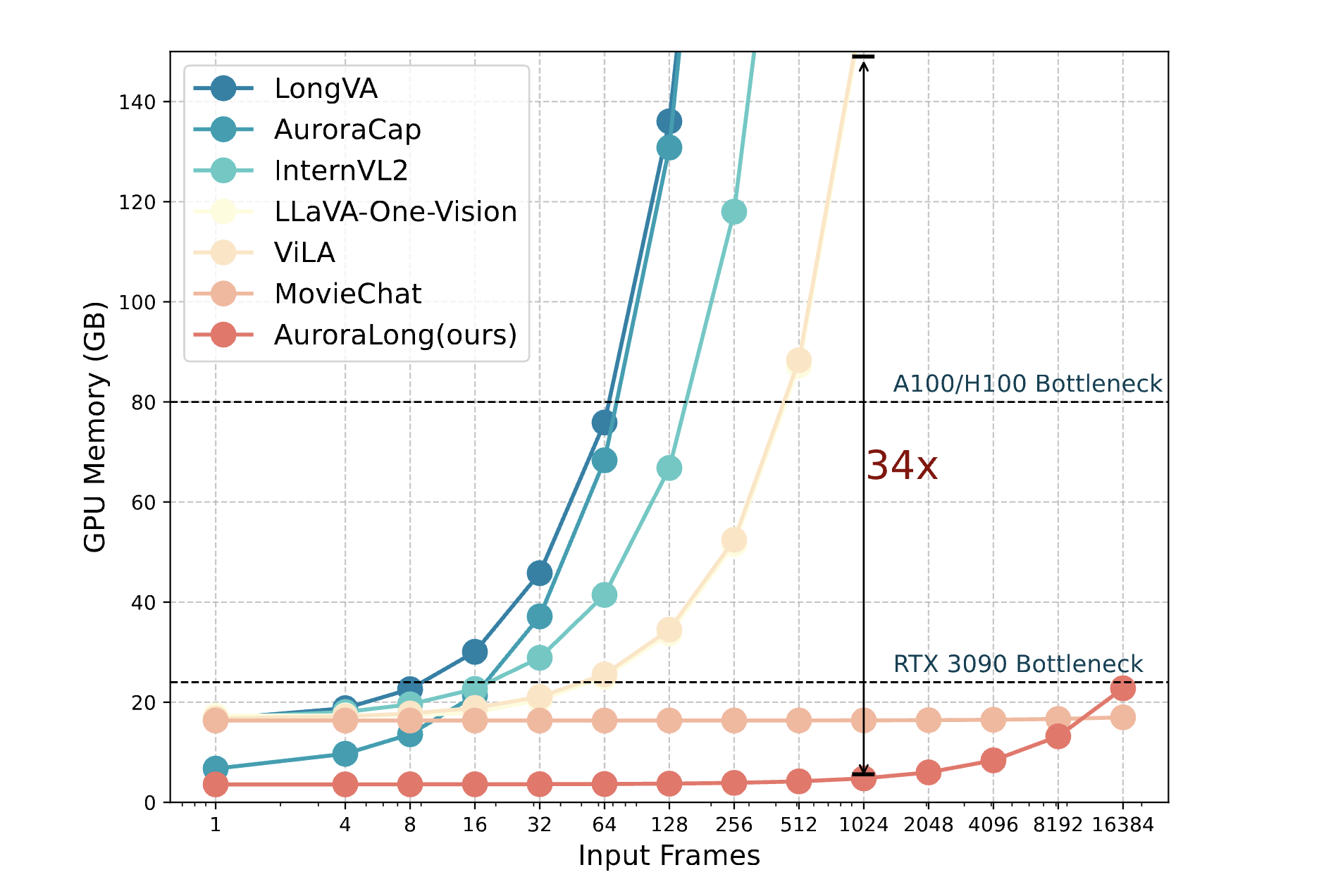

AuroraLong: Bringing RNNs Back to Efficient Open-Ended Video Understanding

ICCV, 2025

AuroraLong uses a linear RNN language model that handles input sequences of arbitrary length with constant-size hidden states to solve long video understanding tasks.

AuroraCap: Efficient, Performant Video Detailed Captioning and a New Benchmark

ICLR, 2025

AuroraCap is a multimodal LLM designed for image and video detailed captioning. We also release VDC, the first benchmark for detailed video captioning.

MovieChat: From Dense Token to Sparse Memory for Long Video Understanding

CVPR, 2024

MovieChat achieves state-of-the-art performance in understanding extra-long videos (more than 10K frames) by introducing a memory mechanism.

Teaching Assistant

Spring 2024

ECE 445 Senior Design (Undergraduate)

Selected Honors & Awards

- 2026

- 2025 Lambda AI Cloud Credits Grant Sponsorship

- 2025 National Scholarship, Zhejiang University

- 2024 National Scholarship, Zhejiang University

- 2021 National Scholarship, Dalian University of Technology
